In anticipation of our look at gadgets on Tuesday, I’m writing this post on how digital media is created, stored, and played. There’s not enough room to get into full details, so this is just a bird’s-eye view. What I’ll cover is how we go from a natural stimulus, like sound or light, to a representation of that stimulus being presented through a device like speakers or a monitor. The name we’ll use for this process is the Digital Media Lifecycle.

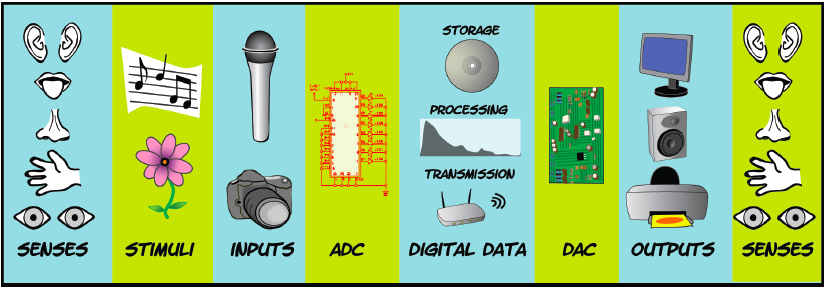

Here is a kind of simple diagram of the process:

And here is a PDF that my colleague Jody Culkin (@jodyc), also a professor in Media Arts & Technology at BMCC, created. Or rather, she created it (she’s an artist) and I helped with the writing.

I’ll run through each phase relatively quickly here.

1. Senses

It all starts with the senses. There are a lot of natural phenomena in the world, but when it comes to creating digital media we are only interested in approximating those that we can sense. Narrower still, most media deals with only two senses, sight and hearing. There is work being done on taste, touch and smell, but we will ignore them for now.

Quick refresher here (many of the details are left out, so forgive me, scientists): we see through our eyes, which focus light onto the retina. The retina is sensitive (through rods and cones) to certain wavelengths of light and to the intensity of the light. Signals are sent from the cones and rods through the optic nerve to our brains, which interpret them as color and brightness.

We hear through our ears, which have little pieces (the eardrum and three bones, actually) that move when sound waves strike them. These movements translate into signals that are sent through the auditory nerve to our brains, which interpret them as sound (and all sorts of things related to sound, like tone, timbre, and, by comparing both ears, direction). Two important pieces for our purposes today are how loud the sound is and its pitch.

Links:

vision (an ad for LASIK, but it compares the eye to a camera): http://www.lasikplus.com/lasik/how-eye-works

hearing: http://www.deafnessresearch.org.uk/How%20the%20ear%20works+1974.twl

2. Stimuli

So the two stimuli that go along with sight and hearing are light and sound. We will narrow that down further to visible light and sound between 20Hz and 20,000Hz because that is all that humans can sense. (Hz is short for hertz, a generic unit that means cycles per second. It can apply to any cyclical or periodic phenomenon. Applied to sound, we hear sound where the waves are vibrating 20 to 20,000 times per second, with the faster vibrations sounding higher in pitch.) Again, narrowing down for these purposes, we are interested in the wavelength of the light because that is what we see as color, and for sound we are interested in the amplitude of the sound wave, which we hear as loudness, and the frequency of the sound waves, which we hear as pitch.
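To make amplitude and frequency a little more concrete, here is a small Python sketch of an idealized pure tone. Real sounds are far messier than a single sine wave, and the function names are just ones I made up for illustration.

```python
import math

def sound_wave(amplitude, frequency_hz, t_seconds):
    """Value of an idealized pure tone at time t.

    amplitude controls loudness, frequency_hz controls pitch.
    """
    return amplitude * math.sin(2 * math.pi * frequency_hz * t_seconds)

def period_seconds(frequency_hz):
    """Length of one full cycle; 1 Hz means one cycle per second."""
    return 1.0 / frequency_hz

def is_audible(frequency_hz):
    """Rough human hearing range used in this post: 20Hz to 20,000Hz."""
    return 20 <= frequency_hz <= 20_000

print(is_audible(440))      # True
print(is_audible(25_000))   # False
print(period_seconds(20))   # 0.05
```

Doubling `amplitude` makes the wave taller (louder) without changing the pitch, and doubling `frequency_hz` packs twice as many cycles into each second (higher pitch).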

Links:

sound: http://www.physicsclassroom.com/class/sound/u11l1c.cfm, http://www.fearofphysics.com/Sound/dist.html

light: http://www.physicsclassroom.com/class/light/u12l2a.cfm

3. Inputs

To begin the process of digitization we need instruments, inputs, that are sensitive to light and sound. Starting with sound, we have the microphone. Essentially a microphone works very much like the ear. There is a part of it, called the diaphragm, which is very sensitive to sound waves. When the diaphragm moves, its movements are translated into an electrical signal. Some microphones need power to make the diaphragm work and generate the electrical signal. They can use everything from a battery to what is called phantom power on more professional microphones.

Digital cameras, similar to our eyes, have two basic parts to capture light. The first part is the lens, which focuses the light, and the second part is the sensor. Simplifying things a bit (there are many kinds of sensors), each sensor in a digital camera is actually made up of millions of little sensors. This is what is meant when you hear about megapixels in cameras. The number refers to how many millions of little light sensors the camera has. Each little sensor is only sensitive to a particular range of wavelengths of light. A typical example is a sensor where the little sensors are only sensitive to red, green or blue light. In addition to the wavelength, the little sensors are also sensitive to the amount of light that hits them. So when the shutter opens, each little sensor transmits an electronic signal that corresponds to the amount of red, green or blue light that is hitting it. To make up for the fact that each little sensor can only sense one color of light, they are arrayed in an alternating checkerboard-like pattern.
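Here is a rough Python sketch of that checkerboard idea. I'm assuming the common "RGGB" Bayer layout (red and green alternating on even rows, green and blue on odd rows), which is only one of several possible arrangements, and the function names are made up for this example.

```python
def bayer_color(row, col):
    """Which color the little sensor at (row, col) responds to.

    Assumes an RGGB Bayer layout; other layouts exist.
    """
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"

def capture(scene):
    """Simulate the sensor array.

    scene is a grid of (red, green, blue) light amounts.
    Each little sensor keeps only the one color it is sensitive to.
    """
    channel = {"R": 0, "G": 1, "B": 2}
    return [
        [light[channel[bayer_color(r, c)]] for c, light in enumerate(row)]
        for r, row in enumerate(scene)
    ]

# A tiny 2x2 "scene" where every point has the same light hitting it.
scene = [[(10, 20, 30), (10, 20, 30)],
         [(10, 20, 30), (10, 20, 30)]]
print(capture(scene))  # [[10, 20], [20, 30]]
```

Notice that even though the same light hits all four positions, each little sensor reports a different number because each one only measures its own color.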

Before we go on it should be noted that, based on the limitations of the input devices, different (sometimes more, usually less) information is being captured than what humans get from direct exposure to the stimuli.

Links:

Gadgets: http://electronics.howstuffworks.com/electronic-gadgets-channel.htm

digital audio (lots of info): http://www.mediacollege.com/audio/

microphone: http://www.mediacollege.com/audio/microphones/how-microphones-work.html

different types of microphones: http://electronics.howstuffworks.com/gadgets/audio-music/question309.htm

Cameras: http://electronics.howstuffworks.com/cameras-photography-channel.htm, http://www.explainthatstuff.com/insidedigitalcamera.html

Nice 3 min video on digital camera: http://electronics.howstuffworks.com/cameras-photography/digital/question362.htm

4. Encoding (Sampling and Quantizing)

Now we have an electrical signal from the microphone and one from the sensor array, and we need to translate them into something computers can understand, namely binary numbers. To do this some kind of Analog-to-Digital Converter (ADC) is used. This is a computer component and can either be integrated into a device, like on a video camera, or be a separate part of a computer, like a sound or video card.

There are two basic steps to the process. The first step is sampling. This is just looking at the electrical signal at a given point. The next step is quantizing, turning the signal at the sampling point into a binary number.

Sound is time based, so the samples must be taken at frequent intervals. How often they are taken is called the sampling rate. A man named Nyquist figured out that to accurately reproduce a wave you have to sample it at a rate of at least twice the frequency of the wave (basically so you get both the peak and trough of the wave). Because we can hear sound up to 20,000Hz, the sampling rate needs to be set to at least 40,000Hz. CD quality audio does it at 44,100Hz. Many current systems use even higher sampling rates, such as 48,000Hz.
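Sampling can be sketched in a few lines of Python. This is only an illustration with made-up function names, using a pure sine tone as a stand-in for the electrical signal from a microphone.

```python
import math

def tone(frequency_hz):
    """A pure tone standing in for the microphone's electrical signal."""
    return lambda t: math.sin(2 * math.pi * frequency_hz * t)

def sample(signal, sample_rate_hz, duration_s):
    """Look at the signal at evenly spaced points in time."""
    count = int(sample_rate_hz * duration_s)
    return [signal(i / sample_rate_hz) for i in range(count)]

def min_sample_rate(highest_frequency_hz):
    """Nyquist's rule of thumb: at least twice the highest frequency."""
    return 2 * highest_frequency_hz

print(min_sample_rate(20_000))               # 40000
print(len(sample(tone(440), 44_100, 1.0)))   # 44100
```

One second of sound at the CD sampling rate is 44,100 separate measurements, no matter what the sound is.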

Next is quantizing: turning every sample into a number. Because this is for computers we translate to binary numbers. Binary numbers are just 1’s and 0’s, like 100010. Each of those digits is called a bit. To make the process simpler, each sample is recorded with the same number of bits. So even if the sample is only the number two (10 in binary), it might be recorded like this: 00000010. The number of bits used for each sample is important for two reasons. First, it sets a hard limit on how many different levels of sound can be recorded. Second, it determines how much storage each sample will need (more bits, more storage). The number of bits used is called bit depth. CD audio records at 16 bits per sample (65,536 different possible sound values).

As expected, more bits produce higher fidelity (trueness to the original sound) in the digital file, and so does a higher sampling rate (up to a point). You might be interested to know that telephone audio is often around 8,000Hz sampling rate and 8 bits per sample, which is why you can hear people fine but music sounds awful on the phone.
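Here is a minimal Python sketch of quantizing. I'm assuming the sample has already been scaled to a value between -1.0 and 1.0, which is a convenience for this example and not how every ADC works. The function names are hypothetical.

```python
def level_count(bit_depth):
    """How many different values a sample can take at this bit depth."""
    return 2 ** bit_depth

def quantize(value, bit_depth):
    """Turn a sample in the range -1.0..1.0 into a whole-number level."""
    levels = level_count(bit_depth)
    clipped = max(-1.0, min(1.0, value))
    # Scale to 0..levels, then keep the top value inside the range.
    return min(levels - 1, int((clipped + 1.0) / 2.0 * levels))

def to_binary(level, bit_depth):
    """Write the level with a fixed number of bits, padded with zeros."""
    return format(level, f"0{bit_depth}b")

print(level_count(16))     # 65536
print(to_binary(2, 8))     # 00000010
print(quantize(0.0, 8))    # 128
```

With 8 bits there are only 256 possible levels, so many slightly different samples end up stored as the same number. That rounding is where fidelity is lost.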
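A quick bit of arithmetic in Python shows how far apart phone audio and CD audio are. The `channels` parameter is my addition: CD audio is stereo, so it stores two channels of samples.

```python
def bits_per_second(sample_rate_hz, bit_depth, channels=1):
    """Raw (uncompressed) data rate for digital audio."""
    return sample_rate_hz * bit_depth * channels

telephone = bits_per_second(8_000, 8)
cd_mono = bits_per_second(44_100, 16)
cd_stereo = bits_per_second(44_100, 16, channels=2)

print(telephone)   # 64000
print(cd_mono)     # 705600
print(cd_stereo)   # 1411200

# One minute of stereo CD audio, in bytes (8 bits per byte).
print(cd_stereo * 60 // 8)   # 10584000
```

So each channel of CD audio carries about eleven times as much data per second as the phone example, and a single minute of stereo CD audio is over 10 million bytes before any compression.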

For light, the samples are the measurements from each of the many little sensors. In effect the sensors are sampling the light. In this way you can think of the megapixels like the sampling rate. Again, each sample must be quantized. The problem here is that each sensor only gave us information about one color (red, green or blue). I’ll skim over a detailed explanation that I admittedly don’t fully understand myself and just say that mathematical formulas are used to estimate the actual light reading for each sensor based on the reading from that sensor and the sensors around it. The resulting color is usually quantized by giving values for the amount of red, green and blue light. Each color is given 8 bits, for 24 total bits. Higher-end cameras now offer the option to store what is called a raw image file, which usually has more bits.
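To give a flavor of the estimating step, here is a very simplified Python sketch that fills in a missing color by averaging the neighboring sensors. Real cameras use much more sophisticated formulas, so treat this as an illustration only. The second function shows how three 8-bit values fit into one 24-bit number. All names here are made up.

```python
def average_neighbors(mosaic, row, col):
    """Estimate a missing color reading from the sensors directly
    above, below, left and right (skipping any that fall off the edge)."""
    readings = []
    for d_row, d_col in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        r, c = row + d_row, col + d_col
        if 0 <= r < len(mosaic) and 0 <= c < len(mosaic[0]):
            readings.append(mosaic[r][c])
    return round(sum(readings) / len(readings))

def pack_rgb(red, green, blue):
    """Combine three 8-bit values (0..255) into one 24-bit number."""
    return (red << 16) | (green << 8) | blue

# The center sensor measured some other color; its four neighbors
# (100, 120, 80, 60) are taken here to be green readings.
mosaic = [[0, 100, 0],
          [120, 50, 80],
          [0, 60, 0]]
print(average_neighbors(mosaic, 1, 1))        # 90
print(format(pack_rgb(255, 0, 0), "024b"))    # 111111110000000000000000
```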

Video cameras do both of the above using a microphone to record audio and image sensors to record a series of pictures.

Links:

Interactive Sampling Rate and Bit Depth explorer (requires Java): http://www.cse.ust.hk/learning_objects/audiorep/aurep.html

Descriptions of Sampling Rate and Bit Depth:

http://wiki.audacityteam.org/index.php?title=Sample_Rates

http://wiki.audacityteam.org/index.php?title=Bit_Depth

5. Digital Data

Now that the information has been sampled and quantized, we have to store the numbers in a file. Most digital files are basically just all of the quantized numbers with a bit of metadata added to tell the computer what kind of file it is.

Where the trickiness and all of the different file formats come in is usually from compression. Looking back, remember that CD audio records 44,100 samples per second. That’s a lot of samples and a lot of data. Compression (whether for sound or images) attempts to reduce the data. Lossless compression reduces the amount of data stored without losing any of the information, and lossy compression throws out some of the data to reduce the size. MP3 is an example of lossy compression that manages to reduce file size to 1/10 or so of CD quality audio. Video is even more demanding as it has both audio and a bunch of images (usually in the range of 20-60 per second). Pretty much all video you see has been compressed. Getting into file formats fully would take too long, but here are some of the common formats for audio, images and video.
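The difference between lossless and lossy can be shown with a toy Python example. The lossless half uses Python's built-in `zlib` module. The lossy half just throws away the low bits of each sample, which is NOT how MP3 works (MP3 uses a model of human hearing); it is only meant to show that lossy data cannot be restored exactly. The sample data and function names are invented for this sketch.

```python
import zlib

# 800 fake 8-bit samples with a lot of repetition.
samples = bytes([128, 128, 129, 129, 130, 130, 129, 129] * 100)

def lossless_roundtrip(data):
    """Compress, then decompress. Returns (compressed size, restored data)."""
    packed = zlib.compress(data)
    return len(packed), zlib.decompress(packed)

def lossy_reduce(data, keep_bits=4):
    """Crude lossy step: zero out the low bits of every 8-bit sample."""
    shift = 8 - keep_bits
    return bytes((value >> shift) << shift for value in data)

packed_size, restored = lossless_roundtrip(samples)
print(packed_size < len(samples))       # True
print(restored == samples)              # True
print(lossy_reduce(samples) == samples) # False
```

After the lossy step every sample has become 128, so the small wiggles in the original are gone for good. The payoff is that the simplified data would compress even further.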

Audio: wav, aiff, mp3

Images: jpeg, png, gif, tiff (and RAW which is actually a bunch of formats)

Video: avi, mpeg, mov, wmv, m4v (and these don’t include many formats used for DVD, Blu-Ray and various video editing systems)

6. Decoding

When it comes time to play one of these digital media files, we need to reverse the work of the ADC and use a Digital-to-Analog Converter (DAC) to reproduce the analog sound. Like the ADC, the DAC can be built into a device or be part of a computer. There is a lot of math here, and the quality of the algorithms and hardware used in this part of the process can greatly affect the sound.
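As a very rough Python sketch of the idea, decoding means turning each stored number back into a signal level and then filling in the gaps between samples. Here I fill the gaps with straight lines, which is a simplification; real DACs use more careful filtering. I'm also assuming the same -1.0 to 1.0 signal range as a convenience, and the function names are hypothetical.

```python
def dequantize(level, bit_depth):
    """Turn a stored level back into a signal value between -1.0 and 1.0.

    Uses the middle of the range that the level stands for.
    """
    levels = 2 ** bit_depth
    return (level + 0.5) / levels * 2.0 - 1.0

def interpolate(samples, sample_rate_hz, t_seconds):
    """Estimate the signal between samples with a straight line."""
    position = t_seconds * sample_rate_hz
    index = int(position)
    if index >= len(samples) - 1:
        return samples[-1]
    fraction = position - index
    return samples[index] * (1 - fraction) + samples[index + 1] * fraction

print(dequantize(0, 8))                  # -0.99609375
print(interpolate([0.0, 1.0], 2, 0.25))  # 0.5
```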

7. Outputs

The newly analog signal is then sent to something that can turn it back into stimuli we can hear or see. Speakers do this for sound. Basically, speakers are just pieces of material that vibrate back and forth in time with the frequency and amplitude of the recorded sound and recreate the original sound waves. Images are sent to monitors that have little lights that shine and reproduce the light (monitor resolutions are also measured in pixels).

8. Senses

And finally we see or hear the light and sound again, hopefully still something like it was at the beginning of the whole process.
